Destroying customer trust one small screen at a time, and what do verifiable credentials really verify?

Plus: A new kind of DPI: ‘Digital *Private* Infrastructure’, and we urgently need the opposite of today’s version of the Internet

Hi everyone, thanks for coming back to Customer Futures.

Each week I unpack the disruptive shifts around Empowerment Tech. Digital wallets, Personal AI and new digital customer relationships.

If you haven’t yet signed up, why not subscribe:

Hi folks,

When I first saw this picture, I assumed it was AI-generated. But it’s real, from 1920.

It’s the ‘Autoped’, an early motorised scooter produced from 1915 to 1921. With e-scooters now everywhere, it was an idea ahead of its time.

But a century ago, the Autoped failed in the market.

Why?

Because:

It wasn’t suitable for the rough roads of the 1920s. The Autoped didn’t feel safe or practical.

‘Urban mobility’ wasn’t a thing yet. Almost everyone just walked or used public transport.

The range and reliability were limited. You couldn’t go very fast, or very far.

It wasn’t very useful. You couldn’t carry other people or things (like a car), nor was it very flexible or light (like a bike).

It’s easier to say this:

Being early is the same as being wrong.

About ten years ago, Bill Gross carried out a brilliant study into startups. Comparing hundreds of companies that came out of Y Combinator and similar ‘accelerators’ in the US over the previous decade.

He wanted to find out what made a successful startup.

He measured a whole bunch of things about the businesses. From the quality of the idea and the skills in the team, to the levels of investment and the size of market opportunity.

But one thing stood out. It had a disproportionate impact on the revenues, market cap and long-term success of the startup.

It was timing.

Who and what else was in the market at the time. How much customers knew they needed the product. How much infrastructure was in place. And of course, whether the public mood and culture at the time were ready to accept and adopt it.

Lots of things need to be true for a new technology business to break through.

And with the latest boom in ‘e-scooters’, we can now see how those other market conditions have finally caught up with the Autoped:

The infrastructure is now in place. Our roads and distribution networks can now cope with lightweight motorised scooters.

We’ve had an explosion of urban growth. Sprawling suburbs and cities desperately need smart, clean and easy ways to get around.

Battery tech and safety tech are now well advanced. Further, faster, lighter, safer.

And perhaps most importantly, it’s become culturally acceptable to be seen zooming around on one of these things.

Here’s the point.

“A great idea alone isn’t enough. It needs the right moment to thrive.”

With AI, the tech has been around for decades. And it’s had more than a few ‘AI winters’. So what’s different this time?

The conditions are now in place for adoption at scale.

AI is having its ‘breakout’ moment because we now have the distribution (cloud), the right tech at the right price point (LLMs), and new demand (via the chat interface).

So let’s look again at Empowerment Tech.

Data stores, digital wallets and the cryptography behind them have been around for over 30 years. Are the market conditions now shifting, falling into place, for the adoption of ET at scale?

We now have distribution (SaaS), the right tech at the right price point (AI agents), and new demand (via smart devices). Plus a new cultural backdrop around privacy and personal data. About data sovereignty and data security.

There’s something in the air at the moment. It’s hard to describe.

From what I can see today - the stealth projects working on digital ID wallets, the advances in Personal AI, the energised teams, the disruptive commercial models, and the new, ripe distribution paths for verifiable credentials - we can say that the early versions of Empowerment Tech are already here.

They’re just not evenly distributed. Yet.

So I’m excited.

But.

Everyone I know working at the frontier of all this - the bleeding edge - is struggling to keep up with how fast it’s all moving. And how fast it’s all arriving into the market. Especially the production-grade AI tools, well beyond flashy demos in a lab.

Yes, the timing of a new tech must be right. But what ‘timing’ means is now changing. The speed of change - of innovation - is breathtaking. Tech adoption is getting faster.

“Being early is the same as being wrong.”

I’m wondering if ‘being early’ now just means having to wait a few months.

As ever, it’s all about understanding the future of being a digital customer. So welcome back to the Customer Futures newsletter.

In this week’s edition:

What do verifiable credentials verify?

We urgently need the opposite of today’s version of the Internet

Destroying customer trust one small screen at a time

As AI Agents learn to do more, we’re going to need a new kind of DPI: ‘Digital Private Infrastructure’

… and much more

So grab your coffee or fruit smoothie of choice, a comfy chair, and Let’s Go.

What do verifiable credentials verify?

Another excellent post from Steve (Lockstep) Wilson:

“In any digital transformation, it is not the new technology that creates the most cost, delay and risk; rather it’s the business process changes.

“The greatest benefit of verifiable credentials is they can conserve the meaning of the IDs we are all familiar with, and all the underlying business rules.

“The real power of VCs lies not in what they change but what they leave the same!

“A minimalist verifiable credential carrying a government ID means nothing more and nothing less than the fact that the holder has been issued that ID. By keeping things simple, a VC avoids disturbing familiar trusted ways of dealing with people and businesses.

“Powerful digital wallets are being rapidly embraced by consumers; modern web services are able to receive credentials from standards-based devices.

“We are ready to transform all important IDs from plaintext to verifiable credentials. Most people now could present any important verified data with a click in an app, with the same convenience, speed and safety as showing a payment card.

“With no change to backend processes and credentialing, we would cut deep into identity crime and defuse the black market in stolen data.”

A brilliant breakdown of what credentials really are, why they matter, and what we can do with them quickly.

The answer, of course, is to make data instantly verifiable.

And when you give that digital superpower to individuals - of data portability - a new digital economy can begin to shape around people.

Digital value can be unleashed with and for the individual. Not just by and for business.
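To make ‘instantly verifiable’ a bit more concrete, here is a minimal sketch of the idea. It is not how any real wallet or credential standard works: real verifiable credentials are signed with the issuer’s private key and checked against its public key, whereas this toy uses a shared-secret HMAC purely so it runs with the Python standard library. The key, the field names and the licence details are all made up for illustration.

```python
import hashlib
import hmac
import json

# Hypothetical issuer key. Real verifiable credentials use an issuer's
# public/private key pair; a shared secret stands in here for simplicity.
ISSUER_KEY = b"demo-issuer-key"


def issue(claims: dict) -> dict:
    """Issuer side: attach a proof computed over the serialised claims."""
    payload = json.dumps(claims, sort_keys=True).encode()
    proof = hmac.new(ISSUER_KEY, payload, hashlib.sha256).hexdigest()
    return {"claims": claims, "proof": proof}


def verify(credential: dict) -> bool:
    """Verifier side: recompute the proof and compare in constant time."""
    payload = json.dumps(credential["claims"], sort_keys=True).encode()
    expected = hmac.new(ISSUER_KEY, payload, hashlib.sha256).hexdigest()
    return hmac.compare_digest(expected, credential["proof"])


# The credential says only "this holder was issued this ID", nothing more.
licence = issue({"type": "DriverLicence", "number": "D1234567", "holder": "Alex Example"})

# Someone edits the licence number but cannot produce a matching proof.
tampered = {"claims": {**licence["claims"], "number": "D7654321"}, "proof": licence["proof"]}

print(verify(licence))   # True
print(verify(tampered))  # False
```

The point of the sketch is the one Steve makes: the ID itself and the rules around it stay the same. The only new thing is that anyone receiving it can check, in an instant, that it is the genuine article.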

We urgently need the opposite of today’s version of the Internet

John Battelle has been looking at how the ‘agentic web’ might emerge.

More specifically, he’s asking how we can avoid it emerging from ‘the belly of the technological beasts we’ve already created’:

“The answer is both simple and maddeningly complex. But at its core, it would require one big change in how all of us interact with technology platforms.

“In short, we need to invert the model of control from the center (the platform) to the edge (each of us).

“It sounds simple, but it’s the opposite of how nearly everything works in today’s version of the Internet.

“But it’s the only model of computing and social organization that can deliver the fantastical promises of today’s tech elite. And if they want to remain in their elite positions, they’re going to have to help us deliver it.”

It’s a must-read. In fact, most of what John writes should be on your weekly reading list. His other belters include:

We Dream of Genies – But Who Will They Work For?

Our Data Governance Is Broken. Let’s Reinvent It.

John’s main point is so important I’ll say it again:

If we want AI to work for the good of people, we’re going to need the opposite of how nearly everything works in today’s version of the Internet.

It’s time to turn everything inside out.

It’s time for Empowerment Tech.

Destroying customer trust one small screen at a time

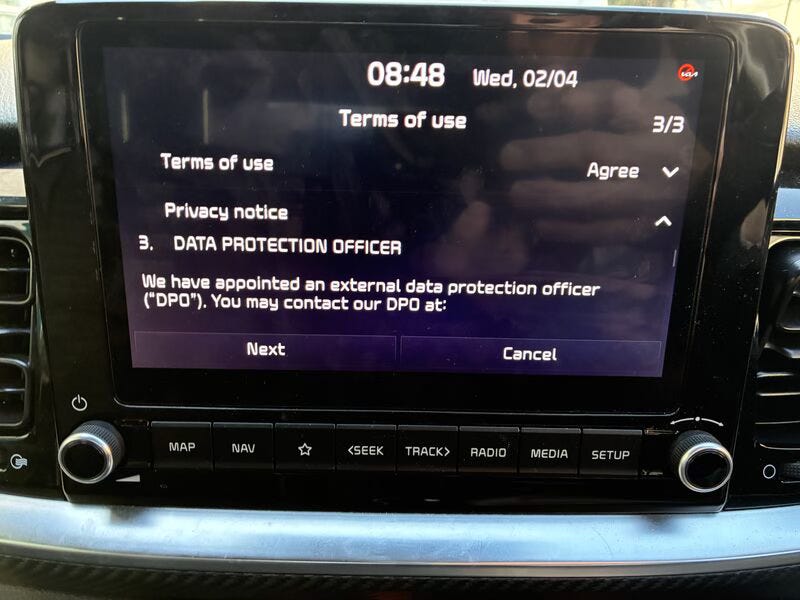

Yesterday, I got into my car, turned on the engine, and was faced with the following Terms Of Service and Privacy Policy update.

Are you kidding? I have to agree to this privacy notice, while reading 14 pages on this screen?

Yes, data protection is important. Yes, we need to build trust with customers. And yes, we need transparency about what data will be collected. About why and where it goes.

But what about the customer experience on the value of this stuff? On why this data is needed, and what I get in return?

Nope, I get 14 pages and 15 taps. And no, I can’t read, or make sense of, a 14-page privacy policy with this view.

Perhaps worse is the timing. They’re forcing me to do it at the exact moment I’ve just got in the car, and need to drive somewhere.

This stuff is so broken.

It was the wrong privacy notice, at the wrong time, in the wrong place.

It reminds me that at last count, Instagram’s and Facebook’s Terms of Service would each take over 10 hours to read. Why? Because these things are written by lawyers, for lawyers.

Transparency and compliance are not enough. We need digital trust, and urgently.

But we also need thoughtful customer experiences that build that trust over time. Not sledgehammer tick boxes at the most inconvenient moment.

Couldn’t this have been a short video? Like a flight passenger safety reel? It certainly would’ve been more engaging, perhaps even valuable. It might even, heaven forbid, have been a moment to help me understand the company values, and even encourage me to spend more with them.

Of course, my Personal AI will soon make sense of these boring and impenetrable data policies for me. And make recommendations on what to accept or not. What to share, or not.

But they will also do more. They will help me understand which brands are doing right by me, and which aren’t. Helping me navigate the consent maze that’s designed to keep me confused. And even helping me manage my data sharing with the car company.

And yet.

The recent fall in the Tesla stock price tells us that people really care about the values behind a company. And what could be more important than the principles and values of how a business uses your personal data?

And in a car, it’s the most private of your data.

Where you go. When you do it. Your behaviours and biometrics in the vehicle. Your insurability. There is a much longer post to be written about how the digital car is the Next Great Data Crisis. But that’s for another time.

For now, the auto industry needs to do better. To pay attention to what’s coming with Empowerment Tech and Personal AI.

And to improve those bloody Terms Of Service on the dashboard.

Because these crappy in-car experiences are destroying customer trust one small screen at a time.

As AI Agents learn to do more, we’re going to need a new kind of DPI: ‘Digital Private Infrastructure’

In 1965, Gordon Moore predicted that the number of transistors we could fit on a chip would keep doubling on a regular schedule, a pace he later revised to every two years. In effect, computers would get exponentially more powerful.

It became known as ‘Moore’s Law.’

That remarkable idea has not only turned out to be accurate, but stubbornly consistent for the last fifty years. Many now say that Moore’s predictable path of computer performance is coming to an end, as we reach the limits of atomic engineering.

So what’s next?

It looks like there may be some sort of new ‘Moore’s Law for Agents’ emerging.

But it’s not about computer speed (although AI Agent improvements themselves are clearly developing exponentially). Rather, now it’s about time.

As AI Agents get smarter, it’s not just that they can do more complicated things. It’s that they can then string those things together to perform longer tasks for us.

Colin Fitzpatrick writes that AI agents are currently doubling the length of tasks they can do every 7 months.

“Today we are at around 1 hour with Claude Sonnet - but it won't be long until AI is doing tasks that would take humans weeks or months.”
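It’s worth doing the arithmetic on that. Here is a rough back-of-the-envelope sketch, assuming the 7-month doubling simply continues from a 1-hour starting point (a big ‘if’), and assuming a 40-hour working week and a 160-hour working month. Those last two figures are my own assumptions, not part of the quoted claim.

```python
import math

DOUBLING_MONTHS = 7   # doubling period quoted above
START_HOURS = 1.0     # "around 1 hour" today, per the quote


def task_hours_after(months: float) -> float:
    """Task length in hours after a number of months, if the trend holds."""
    return START_HOURS * 2 ** (months / DOUBLING_MONTHS)


def months_until(target_hours: float) -> float:
    """Months needed for task length to reach target_hours, if the trend holds."""
    return DOUBLING_MONTHS * math.log2(target_hours / START_HOURS)


WORK_WEEK_HOURS = 40    # assumption: one working week
WORK_MONTH_HOURS = 160  # assumption: four working weeks

print(task_hours_after(14))                      # 4.0 (two doublings)
print(round(months_until(WORK_WEEK_HOURS), 1))   # 37.3
print(round(months_until(WORK_MONTH_HOURS), 1))  # 51.3
```

On those assumptions, a week-long task is about three years away, and a month-long task a little over four. Extrapolating any trend that far is risky, but it gives a feel for what ‘doubling every 7 months’ implies.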

A few weeks ago, LinkedIn personality Zain Khan was getting excited about the latest high-performance AI Agent called ‘Manus’:

“Time to cancel your ChatGPT subscription. Manus automates HOURS of work in minutes. You can do all of the following with just one prompt.”

It seems you can now do something in 15 mins that yesterday might have taken days. And it’s not just ‘fill in the form’. It’s now similar to asking a graduate student to solve a market analysis problem.

But look closely. Most of these tasks are about employee and developer productivity. So too with all the ‘co-pilots’.

What happens when we deploy these AI agents for people to use in their daily lives?

I’m talking about career, home life and money. I’m talking about wellness, health and fitness. Plus payments, booking and all the life admin around family and social.

There’s a lot of personal stuff in my day that takes hours that I’d rather delegate to my trusted Personal AI.

But it will take time.

When the combustion engine was first invented, the first thing we did was replace the mules carrying coal in the mines. We first used it inside business to drive efficiency.

The engine took longer to become useful for individuals, who later got cars and lawn mowers. We needed to wait until these machines became small enough, and safe enough, to use in the home. And until they became affordable.

Well, that’s about to happen with AI Agents.

These powerful new task engines are about to flip into the hands of people. Once they are small enough, safe enough, and cheap enough to use in our personal lives.

Today, inside the enterprise, we have lots of rules and regulations. Lots of governance, accountability and compliance teams. But once AI agents are unleashed with the masses, who is going to provide those guardrails? To manage compliance, security and privacy?

It’s a big and brewing problem.

For our personal AI agents to work properly - and to be trusted - they’re going to need lots and lots of our personal data. Which means we’re now talking about a whole new world of data protection.

And a new set of questions about how they work:

Where is the personal data being used by the AI Agent? When was it accessed? Who by?

How was it processed? Who got a copy?

Were the results biased? Can I trust them?

What’s the business model behind the automated AI actions?
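To show what answering the first few of those questions might involve, here is a small sketch of an access log that a personal AI agent could keep on the individual’s side. Everything in it is hypothetical: the class, the field names and the example companies are mine, and it is not based on any existing product or standard. It covers the where, when, who and ‘who got a copy’ questions. Bias and business models need more than a log.

```python
from dataclasses import dataclass, field
from datetime import datetime, timezone


@dataclass(frozen=True)
class AgentDataAccess:
    """One record of an agent touching a piece of personal data (hypothetical)."""
    data_item: str    # what personal data was touched
    used_in: str      # where: the task that used it
    accessed_by: str  # who by: the agent or party that read it
    processing: str   # how it was processed
    shared_with: tuple[str, ...] = ()  # who got a copy
    accessed_at: datetime = field(
        default_factory=lambda: datetime.now(timezone.utc)  # when
    )


def who_got_a_copy(log: list[AgentDataAccess], data_item: str) -> set[str]:
    """Every party that received a copy of the given data item."""
    return {
        party
        for entry in log
        if entry.data_item == data_item
        for party in entry.shared_with
    }


log = [
    AgentDataAccess("home address", "book a plumber", "my-personal-agent",
                    "matched to local tradespeople", ("PlumbCo",)),
    AgentDataAccess("home address", "renew car insurance", "my-personal-agent",
                    "pre-filled a quote form", ("InsureCo", "QuoteBroker")),
]

print(sorted(who_got_a_copy(log, "home address")))
# ['InsureCo', 'PlumbCo', 'QuoteBroker']
```

The interesting design choice is where this log lives. If it sits with the individual rather than with each business, ‘who got a copy?’ becomes a question people can answer for themselves.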

Before we think about unleashing AI agents with real people and real customers, we’re going to need to level up on personal data governance. On security and privacy. And on digital trust.

You may have heard lots about ‘Digital Public Infrastructure’ (DPI). Described as the ‘digital railways and roads’ we need for civil society. Providing the public infrastructure for things like digital identity, payments and data exchange.

But with AI Agent platforms like Manus, do we really have the right levels of Digital Public Infrastructure on the side of the individual? On the side of the patient? The customer? The athlete? The parent?

I don’t think so.

Today, our data - and all that data sharing - is on the side of the business. Most of which are the BigTech providers. Already dominating digital commerce, customer engagement and our social lives.

We are going to need a new type of DPI, and urgently.

We are going to need ‘Digital Private Infrastructure’ (‘DPRI’).

A new set of digital tools that live on the side of the individual. Based on open standards and open source. And - mirroring government-led DPI - enabling digital identity, payments and data exchange.

But this time it’s on our side, with our own AI Agents.

As AI tech gets more capable, and AI agents can do more complicated and longer tasks, we need to be sure we are empowering people in their daily lives. Not just hollowing out layers of knowledge workers inside our businesses for the sake of efficiency.

Digital Private Infrastructure is going to be one of the most important advances we can develop in this new AI age.


OTHER THINGS

There are far too many interesting and important Customer Futures things to include this week.

So here are some more links to chew on:

Post: How Data Recombination Distorts Identity and Disempowers Humans in the Digital Age

Article: 30% of popular AI chatbots share data with third parties

Paper: Agentic commerce and payments: Exploring the implications of robots paying robots

Talk: Agents flip the internet from The Attention Economy to the Intention Economy

Post: People wearing prosthetic fingers to trick surveillance cameras

And that’s a wrap. Stay tuned for more Customer Futures soon, both here and over at LinkedIn.
