Do you really want things to be hyper-personal…including pricing?

Plus: ChatGPT gets ads, and it’s as problematic as you’d expect... and much more

Hi everyone, thanks for coming back to Customer Futures.

Each week I unpack the disruptive shifts around Empowerment Tech. Digital wallets, Personal AI and the future of the digital customer relationship.

If you haven’t yet signed up, why not subscribe:

Customer Futures Meetup - London, 10th December

Are you curious about Personal AI, digital ID and digital wallets? Or are you already working on Empowerment Tech?

We’ll be at The Hoxton Hotel, Holborn in London (directions here) this Wednesday 10th of December - it would be great to see you.

From 4pm: Trusted AI Agents Meetup

We’ll be gathering in the afternoon for a new regular meetup on TRUSTED AGENTS.

To talk about all things AI Agents, Agentic Commerce, Personal AI and the agentic opportunity for business.

And we’ll be joined by some special guests to explore ‘MyTerms’, a new IEEE standard that means brands can soon accept Your T&Cs. Shared, of course, by Your AI. Exciting stuff.

From 6pm: Customer Futures Christmas Drinks

Then as usual, from 6pm we’re hosting our annual Customer Futures Christmas Drinks. A relaxed catch-up about all things digital wallets, digital ID and the empowered customer.

Looking forward to seeing you there!

Hi folks,

Our work here has never been more important. On customer empowerment. On digital access and inclusion. And perhaps on digital rights full stop.

Because it feels like we are reaching the elastic limit of how we manage our lives online.

Stretching what’s acceptable. Especially around giving digital access to our kids.

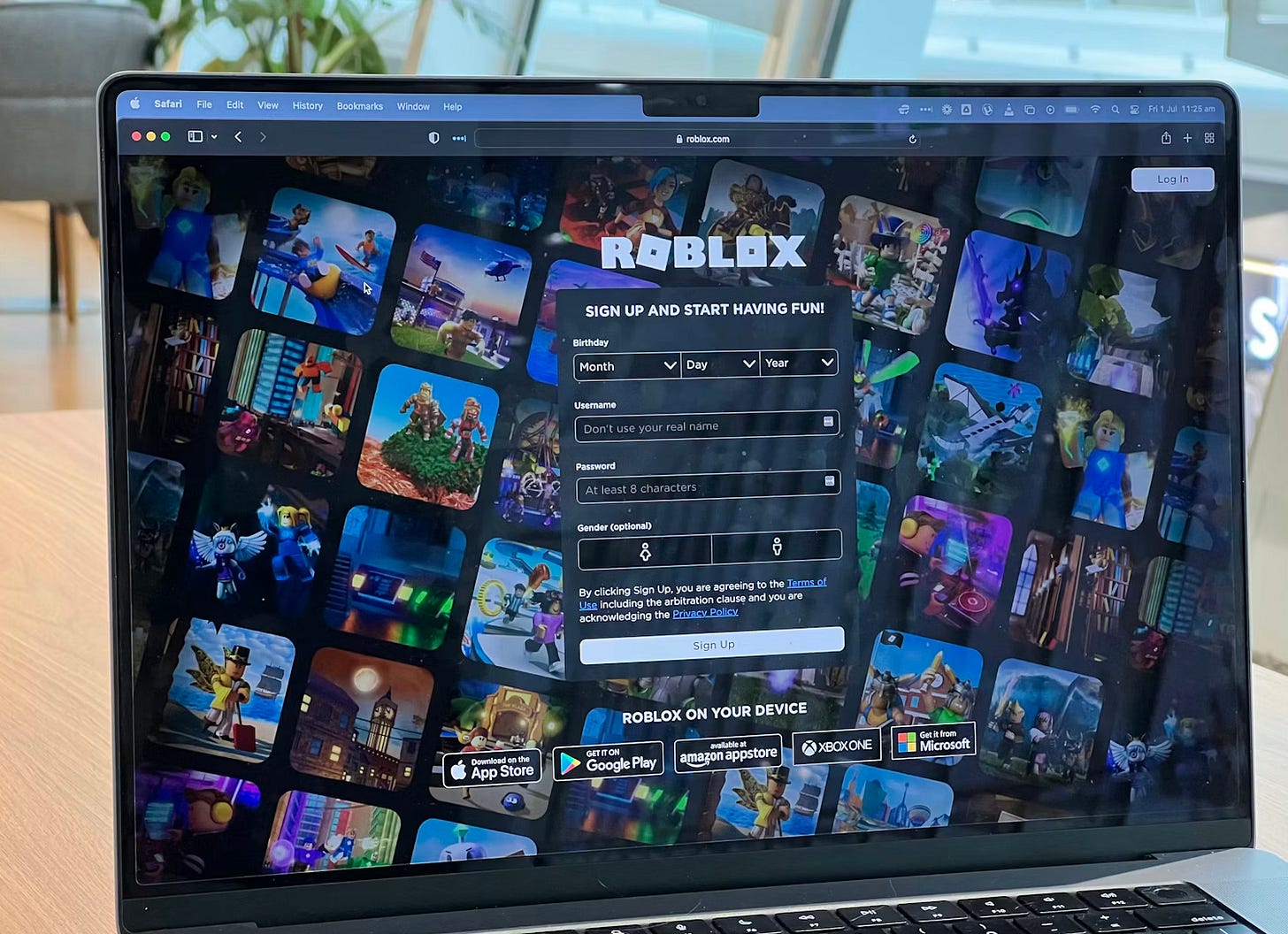

Last month, we heard from a Guardian journalist who pretended to be a 13-year-old girl on Roblox. Here’s what happened to her in just seven days:

Sexually harassed within minutes of joining

“Kids’ fashion game” hid secret sexual rooms

Older players cornered her and simulated sex acts

Parental controls on, still hit with bullying + violence

Kids pushed to spend real money for VIP areas + trolling

Players boasting about what they’d done to “girls”

Yes, we can and must have freedom of expression, and freedom of speech.

But we can’t have freedom from consequences.

It turns out that unmanaged, unfettered access to other people and content is this generation’s smoking.

The pattern is the same: we don’t notice or realise what’s happening at the time, and the damage is done while businesses mask the impacts and chase revenue.

This is not about giving kids access to the internet. It’s about giving the internet access to our kids.

Yes, it’s terrifying. And we can’t just ‘shut it off’. So we must be thoughtful.

But here’s my bigger concern. It’s all about to get much worse.

Fake AI everything: how on earth can we now know who we’re dealing with online? In the old days (last year) it was about using AI to create a fake driving licence. Now it’s about completely realistic but fake human-sounding chatbot conversations, fake videos, fake audio, fake payments, fake gaming and yes, fake relationships.

Personal AI agents: soon we’ll have bots that know us - and our kids - better than we know ourselves. Just wait until these platforms can access our emails, our calendars and go shopping for us. They’ll know what we do, with whom, when, how frequently, and what we like/dislike.

So what does Personal Gaming look like in a couple of years? And what happens to kids’ entertainment generally when it’s hyper-personalised and delivered in real-time?

You see, data protection, digital privacy and online safety aren’t the only challenges here.

It’s also going to be about digital intimacy.

Where we don’t just have to worry about other people. But also about AI agents that look and sound just like people.

So I strongly urge you to read the Guardian article about Roblox. Then talk to your kids. The change has to start now.

Yes, I’m excited about the future of being a digital customer. But it’s never felt more urgent to fix our personal digital infrastructure - and the digital accountability and protections for our kids.

So welcome back to the Customer Futures newsletter.

In this week’s edition:

Do you really want things to be hyper-personal…including pricing?

New paper on ‘OBO’: who’s really acting on your behalf?

ChatGPT gets ads - and it’s as problematic as you’d expect

… and much more

Grab a peppermint tea, and Let’s Go.

Do you really want things to be hyper-personal…including pricing?

We’ve long argued that ‘surveillance marketing’ has an impact. But we’ve long been ignored because apparently people want ‘more relevant ads’.

But what if it’s not ‘relevance’ we get, but ‘personalised pricing’? How do you feel about getting a different price to the person sitting next to you?

We now have to ask: Dear SalesBot, what characteristics are you using to make that decision? What personal data? And what information or behaviours have you deemed to be ‘good’ (lower price) or ‘bad’ (higher price)?

Daniel Levy nails it:

“When people say they don’t care about companies collecting their data, I can understand why. It’s because typically nothing dramatic happens when we click “yes” or “accept.” Life goes on and everything seems fine.

“What makes surveillance pricing particularly insidious is that it exploits the very surveillance infrastructure we’ve always been told is basically harmless.

“You search for a new pair of shoes or a vacation destination and for the next few weeks you’re fed a steady diet of targeted ads. No big deal and perhaps even helpful you might say.

“But things are changing quickly. Companies are using your data to build profiles on you in order to charge you an individualized price, not based on market trends but rather on a sophisticated algorithm’s decision about how much you personally can be squeezed.”

We can pretty much guess what comes next.

The ‘Surge Pricing For Everything’ Economy (how many other people want it at the same time?)

The ‘How Badly Do You Need This’ Economy (is this urgent?)

Or more quietly: The ‘Personal Surplus’ Economy (how much will you pay without noticing the increase?)

So that’s this week’s question: Will business get away with personal pricing as just another example of ‘market forces’?

It’s an uncomfortable truth.

That the more data we share, the more a company can treat us as individuals. The more they can offer us personalised services. Something just for us.

But personalised pricing is the ugly other side of the same coin.

Because by being part of a wider, more generalised (and yes, sometimes anonymous) community, we get many benefits. We get to ‘pool’ our collective interests. Our bargaining power. And our collective risks.

But also pool our collective personal contexts.

When our individual circumstances are spread across the whole group, we can benefit from group pricing. One size fits all.

When it comes to pricing, that’s a Good Thing. A Fair Thing. And an Equitable Thing. We already have a bunch of regulations on fair pricing for a reason.

So in the rush to hyper-personalise everything, we must ask ourselves: is it really what we want?

New paper on ‘OBO’: who’s really acting on your behalf?

Earlier this year, I wrote a post about ‘OBO’. When someone, or something, acts ‘on your behalf’.

Specifically, I wrote about OBO for employees. Because it’s an increasingly important question for businesses. What really happens when an employee acts on behalf of the company?

Do you know if they are allowed to sign that document? Do you know if they have the authority to represent the business externally?

In today’s ever-more digital and remote workplace, IT, risk and compliance teams are having to look at digital risks from all sorts of new angles. Especially when employees change roles, delegate responsibilities, and act across departments and even between organisations all the time.

And yet most organisations still rely on static records to prove who’s authorised to do what.

Yes, Identity and Access Management (IAM) platforms keep internal systems secure. But they weren’t built to prove representation or authority outside the company walls.

When an employee signs a contract, approves a supplier, or files a regulatory report, the real question isn’t ‘who logged in?’, it’s ‘who were they representing?’ and ‘were they still authorised to act?’

So together with Digidentity, we’ve produced a full white paper on OBO, a new perspective on employee risk. And how to protect the business in the ‘moments that matter’.

Using new digital ‘OBO credentials’ to move trust to the moment of action. To when it matters.

Hope you enjoy it. We’d love your feedback.

ChatGPT gets ads - and it’s as problematic as you’d expect

In the age of Online Search, we have all learned to speak ‘keyword-ese’. The search hack that lets us shortcut our way to an answer.

dishwasher error E02 filthy fix

Type some words, get a list of links. And now, of course, an AI’s best guess at what we meant.

But what started as ‘organic search’ fast became results that were “promoted” or “sponsored.” Because Search is really a screen real-estate game: about first-page rankings, about traffic and eyeballs… and all about “intent.”

Which is why Search Engine Optimisation, SEO, became a billion-dollar industry.

But our new AI-powered chatbot tools are different. And they’re killing SEO in real time.

Why?

Because AI chat is intimate. Conversational. And context-rich in a way keyword-ese never was.

So now we’re all learning a new language: ‘prompt-ese’. Figuring out how to coax the right answer from a GenAI model through instruction, nuance and trial-and-error.

And so it was inevitable. Our AI chats are about to get ads too.

Where the AI platforms take much richer, stronger intent signals and match them to products and services that want to show up inside the customer’s conversation.

Not as a sponsored list. But rather as a product whisper inside a personal chat.

Now, add some AI memory (your last 500 prompts), 800 million monthly active users, and suddenly OpenAI becomes one of the richest data sources the advertising market has ever seen.

And it’s becoming urgent for ChatGPT. Of those 800M monthly users, only about 35M are paying. Their investor filings are saying the quiet part out loud: advertising is going to be a necessary revenue stream. And fast.

Tom Goodwin is asking the right questions:

“What does this mean for the information people will be prepared to share?

“Will ads persuade in this context?

“Will they look like sponsored search? Display? Something else?

“Will people just jump to Grok, Gemini or Claude if they feel uncomfortable?”

But the real story isn’t the ads. It’s the data protection dance they trigger. Because now the questions multiply:

What does consent look like when real-time bidding touches personal data from a chat?

What happens when an ad isn’t just a suggestion, but a two-tap trigger for an agent that can auto-book or auto-pay?

Where does all that personal data go? The history, the preferences, the payment details?

We’re not in Kansas anymore.

Introducing ads into ChatGPT - or frankly any of the mega-scale AI platforms - isn’t just another revenue line. It’s a deeper question about what personalisation really means. About control over the sales funnel, and new, important questions about data protection.

But most importantly, about whether we want this future at all.

OTHER THINGS

There are far too many interesting and important Customer Futures things to include this week.

So here are some more links to chew on:

Post: MyTerms are Your Terms READ

Idea: As Sovereign as Possible — But Not More Sovereign Than That READ

Article: ACDCs Should Underpin Digital Trust; Keep W3C VCs as Derivative Artifacts (a must-read if you’re interested in credential tech choices) READ

News: Chrome starts a trial for the Digital Credentials API for credential issuance READ

Post: Is OAuth enough for agent-based systems? READ

And that’s a wrap. Stay tuned for more Customer Futures soon, both here and over at LinkedIn.

Time to wake up. Automatic verification, rulebooks and regulation are probably not enough. Maybe we need personal, wallet-carrying AI agents to defend us and our kids. Could be a killer product...