Your AI Assistant Can Now Testify Against You In Court, and ET Must Not 'Phone Home'

Plus: My hotel is going to need a digital ID too, and the end of the dream for self-sovereign identity

Hi everyone, thanks for coming back to Customer Futures.

Each week I unpack the disruptive shifts around digital wallets, Personal AI and digital customer relationships.

If you haven’t yet signed up, why not subscribe:

June Meetups - Barcelona, London and Sao Paulo

Why not join us in person to talk about all things AI Agents, digital wallets and the future of the digital customer relationship:

BARCELONA, SPAIN

6.30pm, Tuesday 10th June 2025

Main bar, Torre Melina, Gran Meliá Hotel, Av. Diagonal, 671, Les Corts, Barcelona (here)

I’ll be speaking at Phocuswright Europe (get in touch if you want to connect in person)

LONDON, UK

6.30pm, Wednesday 18th June 2025

Brewdog Waterloo, (underneath) Waterloo Station, 01 The Sidings, London SE1 7BH (here)

NOTE: This is the same day as the free Empowerment Tech Workshop in London on FINTECH - find out more and sign up here.

SAO PAULO, BRAZIL

7pm, Wednesday 25th June 2025

Central São Paulo - venue to be announced shortly

Hi everyone,

In case you missed it, we're launching a new series of live, expert-led sessions on how Empowerment Tech is reshaping entire sectors.

Join us, won’t you?

We’ll be covering everything from travel and money, to healthcare and education. From the regulations to the tech. And from digital wallets and data stores, to AI Agents and verifiable credentials.

We’re kicking off the series with a deep dive into Fintech.

On the 18th of June in London, we’re hosting a focused, invite-only workshop for fintech operators, CX leaders, identity pros, and wallet nerds. To unpack what Empowerment Tech means for finance, and what’s coming next.

Our first resident expert is Erin McCune from Forte Fintech. Bringing a wealth of experience and deep expertise around what’s changing across payments, infrastructure, and customer control.

To be clear, this isn’t a lecture.

It’s a hands-on, collaborative session to share insights, explore the big shifts, and connect with others making sense of the digitally-empowered customer.

When: 18th June 2025, 15:30–18:00

Where: Near Waterloo, London (venue confirmed after registration)

Who: Fintech operators, product leaders, identity pros and customer strategists

Attendance at these sessions is strictly limited, and registration is required. So grab your spot now.

More on the live ET series coming soon, and do get in touch if you’d like us to cover a specific topic, or to run a session in a particular location. You can DM me here.

It’s all just another opportunity to unpack the future of being a digital customer. So welcome back to the Customer Futures newsletter.

In this week’s edition:

Your AI Assistant Can Now Testify Against You In Court

My hotel is going to need a digital ID too

Free customers are more valuable than captive ones

UK Information Commissioner talking Empowerment Tech

ET must not phone home

The end of the dream for self-sovereign identity

… and much more

Let’s Go.

Your AI Assistant Can Now Testify Against You In Court

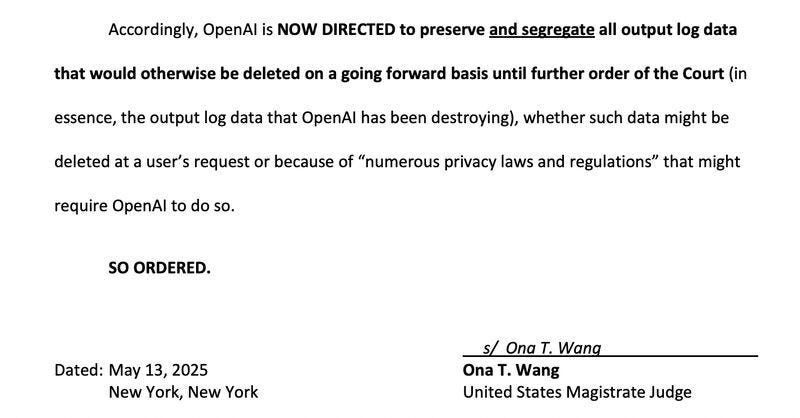

A US court has just ordered OpenAI to preserve and segregate all ChatGPT chat histories. Including ‘temporary’ and ‘deleted’ ones.

It’s part of a legal fight between the New York Times and OpenAI. Arguing over whether people are using ChatGPT to get around the NYT’s paywall.

But.

It’s a stark reminder to ask yourself: what does your digital assistant actually know about you?

Try pasting this prompt into ChatGPT (credit to Hugging Face’s CTO for the tip):

“Please put all text under the following headings into a simple document overview, with long-form summaries: Assistant Response Preferences, Notable Past Conversation Topic Highlights, Helpful User Insights, User Interaction Metadata.”

What it returns is a summary of you, as it sees you. Your preferences, your style, your profile.

Any surprises? How did it make you feel?

Because here’s the real issue. The more you interact with these powerful AI systems, the more they learn… and the more they can now be made to retain for the courts. Do you know, and do you trust:

Where is that data?

Who can access it?

What can they do with it?

And now that a US court requires even private logs to be preserved, and your AI assistant can now testify against you in court, what happens next?

As Anjur Bannerjee says, it’s “a chilling example of why privacy-preserving tech is needed for the emerging flood of AI-powered applications.”

I use ChatGPT most days. But I’m ready to switch.

My hotel is going to need a digital ID too

Perplexity just quietly launched the next generation of travel search.

Apparently it’s not just accurate, it’s useful, even delightful. And it feels like magic. But it's also a warning shot, and should be sending shockwaves across the travel sector.

Here’s Simon Taylor’s take:

“Instead of scrolling through 847 hotels and their photos trying to spot a decent gym, I asked Perplexity: Find me a hotel in Amsterdam with a serious gym, walkable to conference venues, under €300/night.

“30 seconds later: Perfect recommendation. The exact hotel I actually use.

“But here’s the scary part for traditional travel sites: Perplexity made the experience better.

No infinite scroll.

No fake reviews.

No 'sort by price then realise the photos were misleading.'

No 'oh there’s no filter for gyms.'

“Just ask what you actually want. Get what you actually want.”

Yes, AI agents can automate all the things. But remember they can fake all the things too. So here come the hard questions:

How will we handle the unhappy path, when the booking fails, or there’s an issue with the payment?

How will travel providers know a booking isn’t fraud?

How will individuals know the hotel really exists?

Who’s on the hook when (not if) an AI gets it wrong?

What now happens to travel SEO, affiliate links and booking revenue models?

But just like when the customer moved over to mobile and social, this is an interface shift that’s quietly going to restructure entire industries.

And it’s way more than a disruption to ‘search’ and online travel agents. It’s also now about digital trust.

We’re going to need new ways to verify who we’re dealing with. A new approach to digital identity and digital reputation. And not just for travellers and passengers. But for their AI agents, and all the travel providers they interact with.

You see, my hotel is going to need a digital identity too. So will every venue, every ticket, and every booking system.

We’re not in Kansas anymore.

Free customers are more valuable than captive ones

Over a decade ago, I heard a brilliant phrase from Doc Searls, co-author of The Cluetrain Manifesto and author of The Intention Economy (both essential reading, by the way):

“Free customers are more valuable than captive ones.”

It sounds odd at first. But when you really think about it, it’s so simple. And so important. Because if you force a customer to stay, they’re not ‘loyal’ anymore. They’re just stuck. Good luck getting a great NPS when your customer can’t leave.

Now flip it.

A free customer - who could leave but chooses not to - is exponentially more valuable. They want to be with you. They’ll refer others. They’ll probably spend more money with you over time. And they’ll cost far less to keep.

But acquiring, verifying, onboarding, and then locking in a new customer? That’s expensive.

So the best brands don’t chase retention by locking people in. Rather, they create value, they create memorable experiences, and they create reasons to stay.

This week, I saw another excellent example of a company just blindly getting lock-in wrong: Lovable.

As Madalin Chirila puts it: “When a $1.5B product makes it hard to leave, that’s not retention - that’s friction.”

Because Lovable has:

No clear or easy ways to cancel the plan or subscription - only offering a ‘downgrade’

No visual indication of how many cancel steps are involved - the experience is deliberately obscure and unintuitive

No clear way to delete your account - you have to get in touch, yet they offer no clear email, no support form, no button

What a load of BS.

My personal favourite example is the UK newspaper The Times. If you want to leave their monthly digital subscription, you have to call a phone number. That’s right, in 2025, to leave a digital-only service, you have to speak to a real human. Not to discuss the product, or to chat about ways to improve the service, but so that they can try to talk you out of leaving.

It takes about 10 minutes to get out of the contract. And then of course, you’re spammed monthly to reactivate the account. And you’re also forever checking your bank statements to make sure they’ve actually stopped taking payments.

(Last year I caught them renewing an old, ‘grey subscription’ - the kind you stop but not completely, so they keep billing you and hope you don’t notice - but only after 6 months… So frustrating.)

Sadly, it’s just another dark pattern.

They make exit just hard enough so that a few won’t bother. And then hope no one notices. Too many companies now rely on this kind of distraction and noise. They bank on your exhaustion.

So Doc is right. Free customers are flat out more valuable.

I’ll say it again, but differently: free customers are CHEAPER. Because if I trust the brand, I’ll stay. I’ll spend more, and I’ll tell my friends.

Jeff Bezos once said that advertising is just the cost of having a crappy product. And if you have to prevent customers from leaving? Then your product isn’t just unremarkable. It’s crappier than you think.

UK Information Commissioner talks Empowerment Tech

The UK’s data protection regulator, the ICO, has been weighing in on the national AI and biometrics strategy.

And some of the debate has centred on big questions: Will AI adoption require new human rights legislation? Is privacy a precondition for trustworthy AI?

But this line stood out, from the Government’s own expert AI working group:

“The Information Commissioner (John Edwards) put it simply: if AI is doing something for people, the risk is lower. If it’s doing something to people, that’s where scrutiny is needed.”

Now we’re talking. Because the big issue with AI isn’t just about data protection and digital trust. It’s about intent. Whose interests is the AI really serving? What are the incentives behind the system?

Thanks to James Robson for the pointer.

ET Must Not Phone Home

There’s a fundamental flaw in some of the new digital identity systems being proposed around the world. And you need to be paying attention.

It’s to do with how your data is verified when you share it, and how these systems, if implemented a certain way, can enable near-perfect surveillance.

With certain digital ID setups, every time you share your personal data - whether to prove your age, unlock a car, or open a bank account - a quiet signal can be sent back to the original issuer of the data.

It matters because a company or government could soon quietly track what you are up to online.

A super cookie for real life. A bit like Facebook’s ‘tracking pixel’… but built into your driver's license.
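For the more technical readers, here’s a rough sketch of the difference. It is a toy model, not the mDL protocol or any real wallet API: the names `Issuer`, `verify_online`, `verify_offline` and `usage_log` are all made up for illustration, and the HMAC is only a stand-in for the public-key signature a real credential would carry (in a real system the verifier would hold a public key, never the issuer’s secret). The point is simply what the issuer gets to see in each case.

```python
import hashlib
import hmac
from dataclasses import dataclass, field
from typing import List, Tuple


def _sign(key: bytes, claims: str) -> str:
    # Stand-in for a digital signature (assumption: real systems use
    # public-key signatures, not a shared-secret HMAC).
    return hmac.new(key, claims.encode(), hashlib.sha256).hexdigest()


@dataclass
class Issuer:
    """Hypothetical issuer, e.g. a driving licence authority."""
    key: bytes
    usage_log: List[Tuple[str, str]] = field(default_factory=list)

    def issue(self, claims: str) -> Tuple[str, str]:
        # The credential is signed once, when it is issued.
        return claims, _sign(self.key, claims)

    def verify_online(self, claims: str, tag: str, verifier: str) -> bool:
        # "Phone home": the issuer is contacted on every presentation,
        # so it can record who asked and what was shared.
        self.usage_log.append((verifier, claims))
        return hmac.compare_digest(_sign(self.key, claims), tag)


def verify_offline(verification_key: bytes, claims: str, tag: str) -> bool:
    # No phone home: the verifier checks the signature locally with key
    # material it obtained in advance. The issuer is never contacted.
    return hmac.compare_digest(_sign(verification_key, claims), tag)


issuer = Issuer(key=b"demo-key")
claims, tag = issuer.issue("over_18=true")

# Two presentations checked by calling the issuer: both leave a trace.
issuer.verify_online(claims, tag, verifier="bar")
issuer.verify_online(claims, tag, verifier="car rental")
print(issuer.usage_log)

# One presentation checked locally: the issuer's log does not grow.
verify_offline(b"demo-key", claims, tag)
print(len(issuer.usage_log))
```

Both approaches confirm the credential is genuine. Only one of them leaves the issuer holding a trail of where you used it.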

Privacy and security experts around the world are now sounding the alarm, specifically about a credential standard called the Mobile Driver’s License (mDL). The issue is called “phoning home,” a feature that, even if not used at first, can be quietly turned on later.

And if it can be turned on, you can be certain that in the future, someone will turn it on.

That’s why I’ve signed the ‘No Phone Home’ open letter, alongside a global community of privacy, identity, and digital rights advocates.

Here’s the heart of the message:

“Identity systems must be built without the technological ability for authorities to track when or where identity is used… Even if inactive, this capability will eventually be used. We call on authorities to prioritize privacy and security over ease of implementation.”

We simply cannot build Empowerment Tech - tech that puts people first - on foundations that quietly enable control, surveillance and tracking. And we can’t say we’re giving people digital freedom while baking in the mechanisms of real-world monitoring.

Put simply, we can’t have Empowerment Tech without empowerment.

As Edward Snowden puts it:

“Arguing that you don’t care about privacy because you have nothing to hide is no different than saying you don’t care about free speech because you have nothing to say.”

This is about protecting our basic digital freedoms. About ensuring the infrastructure we build respects the people it’s meant to serve. And about giving people the right to prove who they are, without leaking where they’ve been.

No silent signals. No hidden pings. No phoning home.

If we get this wrong, we risk turning digital wallets into surveillance tools. For those who care about the details - and boy, that should be everyone - I not only recommend reading Kim Hamilton Duffy’s posts about it:

Even the Experts Didn't Know (read this one first)

Is Server Retrieval Actually Optional? The Investigation That Revealed More Problems

…but I also suggest you read the comments under Kim’s LinkedIn post about the debate. Lots of others have written brilliantly too, just look up #nophonehome.

My take:

Empowerment Tech (ET) Must Not Phone Home.

(If you don’t get the reference, go and watch the film ‘E.T.’ immediately, where an alien tries to get back to his spaceship by “phoning home.” Sweet in the movie. Creepy when it’s your digital ID doing it behind your back.)

Let’s build an empowered future where our digital tools work for us. Not against us.

NO PHONE HOME OPEN LETTER, KIM’S LINKEDIN COMMENTS

The end of the dream for self-sovereign identity

While we’re at it, let’s talk about Self-Sovereign Identity, or ‘SSI’. And the very same values we’re trying to protect with ‘No Phone Home’ above.

The SSI movement, which started around 2015, gave birth to Digital ID Wallets as we know them today. Not to mention, much of the cryptography behind verifiable credentials.

But some say we’re at risk of losing the core ideas around SSI, including ‘privacy by design’. It’s worth reading a recent article by Nathalie Launay. Asking if privacy by design is over if we allow ‘re-centralisation attempts’ by BigTech and others in the new digital wallet market.

Here’s her punchy conclusion (bold mine):

“Although any digital identity wallet can comply with consent, data minimisation, persistence, access, existence… any recentralisation of the digital wallets ecosystems or infrastructures […] will destroy the 3 other pillars of a Self Sovereign IDentity as defined by Christopher Allen: Transparency, unlinkability and the full control of the data holder.

“Such attempt of recentralisation of initially decentralised/distributed digital ecosystems cannot comply with the GDPR privacy by design principle […], and will also mean new single points of failure with cybersecurity risks…”

Perhaps it’s no coincidence that in my chats this week about governments and BigTech pushing digital wallets and reusable ID, I’ve heard the same line more than once:

“The road to hell is paved with good intentions.”

OTHER THINGS

There are far too many interesting and important Customer Futures things to include this week.

So here are some more links to chew on:

News: Android now supports digital verifiable credentials READ

Post: The UK government wallet and the liability conundrum READ

Article: The most disruptive pricing models for AI READ

Idea: Doing for AI Agents what DNS did for the internet: AgentDNS READ

Report: Mapping the Progress of EU Digital ID Wallets READ

And that’s a wrap. Stay tuned for more Customer Futures soon, both here and over at LinkedIn.
