The invasive digital profile you never agreed to, and winning at AI agents with user experience

Plus: Our blindspots with MCP (it’s early and complicated), and why do we pick the worst digital tool for the job?

Hi everyone, thanks for coming back to Customer Futures.

Each week I unpack the disruptive shifts around Empowerment Tech. Digital wallets, Personal AI and new digital customer relationships.

If you haven’t yet signed up, why not subscribe?

New Customer Futures Meetups

Exciting news. We have two more Customer Futures meetups coming up!

Join us for an informal catch-up over a cranberry juice or beer, where we’ll be talking about all things Empowerment Tech. AI Agents, digital wallets and more. It would be great to see you.

BERLIN: 6pm, Tuesday 6th May 2025

2nd floor Bar, Motel One, Alexanderplatz, Grunerstraße 11, Berlin.

It’s the first night of the EIC conference (DM me if you want to grab a coffee)

BARCELONA: 6.30pm, Tuesday 10th June 2025

Main bar, Torre Melina, Gran Meliá Hotel, Av. Diagonal, 671, Les Corts, Barcelona

I’ll be speaking at Phocuswright Europe (let me know if you want to connect in person)

Hi folks,

Question of the week: Can our understanding of what’s unfolding with AI Agents keep up with the actual use of AI, and all the latest LLM developments?

I don’t think so. There’s too much happening, in too many places, across too many topics, to keep track.

But there’s a deeper point to make. We are going to need new solutions to new problems. Finding answers to questions we haven’t even asked yet. About things like protecting children, respecting privacy, and handling the coming wave of ‘AI intimacy’.

Yes, we can see some of it coming. But we can’t yet see the impact that AI Agents are going to have. This is a ‘double-bind problem’, and it has a name.

It’s called the Collingridge Dilemma, where:

You can’t predict the impact of a technology until it is extensively developed and widely used

You can’t then control or change a technology once it has already become widely used

It puts us in a tough spot if we want to be careful about AI Agents using personal data. Where right now, most AI innovation is working on the principle of ‘forgiveness not permission’. But what if data protection needs ‘(customer) permission not forgiveness’?

Ah, but there’s good news!

OpenAI have committed to "…work to remove personal information from the training dataset where feasible … and minimize the possibility that our models might generate responses that include the personal information of private individuals."

Uh-huh.

And good luck to all the big tech companies that have already shut down their AI ethics teams.

We can only hope that those building these powerful new AI Agent platforms are paying attention. To customers, not just to tech. To value, not just to training data. And to real security and privacy governance, not just to flimsy privacy policies.

It’s why the Customer Futures network matters so much. We certainly don’t have all the answers. But together we’ll know people that do.

For now though, and as ever, it’s all about understanding the future of being a digital customer. So welcome back to the Customer Futures newsletter.

In this week’s edition:

Why do we pick the worst digital tool for the job?

Using GenAI in 2025 - this time, it’s Personal

Winning at AI agents with user experience

Our blindspots with MCP (it’s early and complicated)

Going for dinner, and leaving with an invasive digital record you never agreed to

… and much more

So grab your coffee or fruit smoothie of choice, a comfy chair, and Let’s Go.

Why do we pick the worst digital tool for the job?

Another excellent post from Tom Goodwin on why, when we have so many customer channels, we so often use the worst one.

“Phone calls are terrible for reading out personal information or completing forms, they are abysmal for non real time things.

“Paper forms are terrible for anyone that needs to actually read what people wrote, and are woefully inefficient.

“Increasingly text, instant message or even good old fashioned email are the way ahead, perhaps triaged by AI, but somehow people want to sell in Chatbots, call centers, or self serve systems that don't work.”

Spot on.

But there’s another hidden and important point here. It’s not about “AI or Not”. Or which customer channel or not.

It’s that businesses are largely rubbish at understanding customer context.

To recognise how the customer might be feeling. How urgent the matter is. What data needs to be shared. And therefore which channel might be best.

A chatbot, email, WhatsApp or phone call all do different things. A call centre behaves differently to an app.

Brands grandly talk about ‘multi-channel’, and ‘omni-channel’. But really what we need are smart digital tools - and indeed AI - to help customers navigate to the right channel at the right time.

And for the business to be listening, and ready to respond in that right channel, themselves using AI to make sense of the customer’s own journey. Are they on the unhappy path and in distress, or just making a trivial account change? Is it about a spiky complaint or a VIP upgrade?

It’s time to reimagine the role of the Chief Customer Officer.

Not because they understand ‘data’. Or because they’re responsible for the end-to-end customer experience (though they should).

But because the CCO now needs to be responsible for how the business responds to customers showing up with their own digital tools. Digital wallets. Data stores. And yes, AI Agents.

We can learn from recent history about using ‘the right digital tool for the job’. This time it’s about employees.

A few decades ago, it was only big businesses that could afford to offer mobile phones to their workers. But then the iPhone showed up, and employees started to have better phones at home than they were given at work.

And so businesses had to respond. They ended up creating ‘Bring Your Own Device’ policies. Where you could be approved to use your (better, smarter) personal mobile phone for work.

The best tool for the job.

The same is about to happen with AI.

Today at work, we get given co-pilots, email assistants and meeting transcription services. But soon our Personal AIs will be better for many tasks.

They’ll understand our learning styles. How we prefer to communicate and when. They’ll know how productive we are in different settings. And they’ll be tuned into the background noise of our daily lives. How to handle the digital chaos that surrounds our 9-5.

So will businesses agree to ‘Bring Your Own AI’?

Not likely. They barely survived the security and data risks when employees brought their own internet-connected devices into the workplace.

And it’s about to get messy.

What happens if my AI at home is better than the one I get given at work? You can bet that employees will use their own anyway.

Bonus points if you can answer this: what happens to your AI co-pilot at work - who knows you the best, learns your style, your productivity hacks, and how to make you a super-efficient employee - when you leave the job?

Do you get to take that employee data with you? All that highly personal information about your productivity? All that personal support? That intimate employee profile?

Nope.

We grandly talk about ‘portable learner records’, but what about our intimate AI assistants that are helping us learn in the first place? Helping us do our best work?

Tom Goodwin is right about using the Best Digital Tool For The (Customer) Job. But it also means we need to rethink which AI we use, when, and why.

Using GenAI in 2025 - this time, it’s Personal

Marc Zao-Sanders and HBR have produced a compelling report into how people are actually using AI.

Not just following the hype, but researching which apps are actually being used and why, and digging into the underbelly of conversation around them.

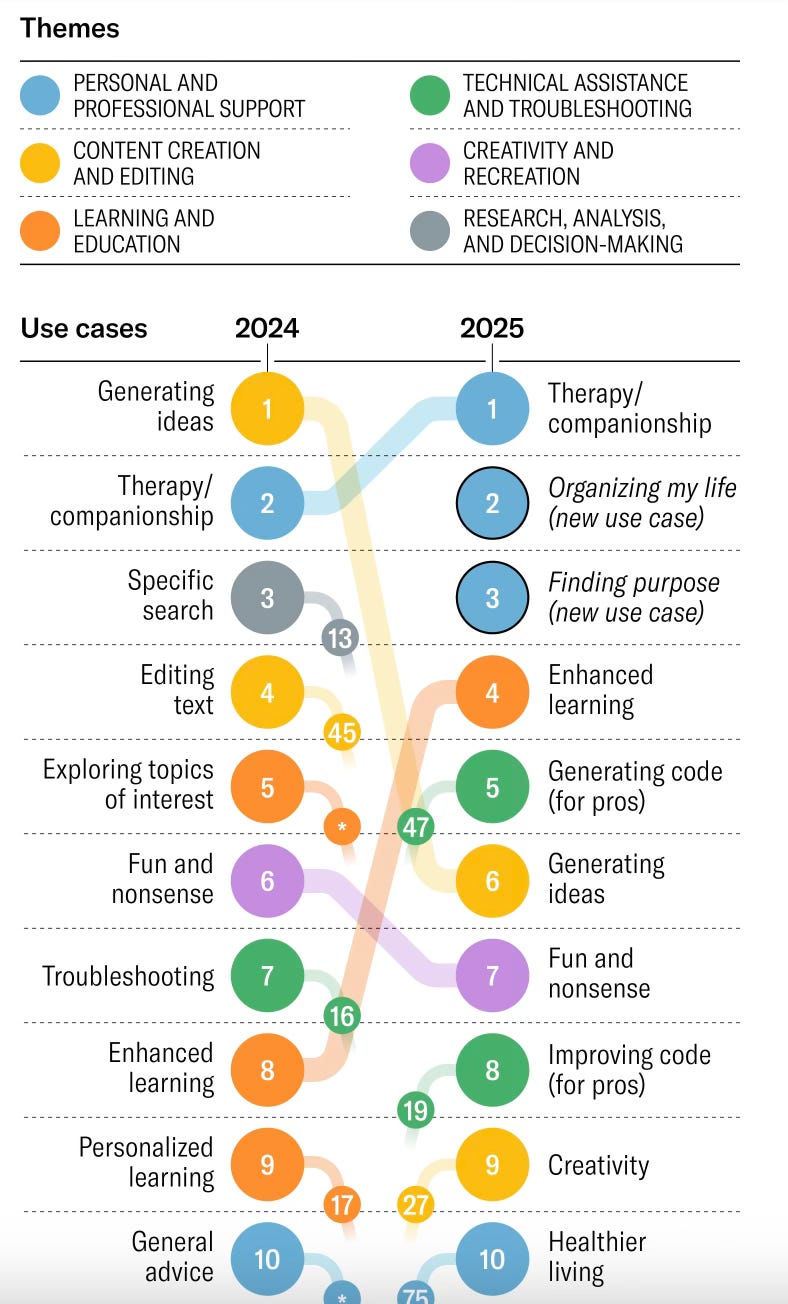

As always, a picture paints a thousand words. Just look at the results.

First, notice how much change there is. New use cases leaping into the top 10, and many dropping away.

But I noticed something else.

The blue category is ‘Personal and Professional Support’. Yet in the top 10 use cases, that really just means ‘Personal’, not professional.

In fact, the top 3 (and 4 out of the top 10) are all about Personal AI. Therapy, organising my life, finding purpose, and healthier living.

Only two in the top 10 are about software and development (green). Yet if you believe the explosion of posts online, AI is all about taking over software, vibe coding and replacing jobs.

I don’t know who needs to hear this, but I’ll say it again:

With Personal AI, digital privacy isn’t going to be the problem. Digital intimacy is going to be the problem.

Our AIs knowing us better than we know ourselves.

I don’t know about you, but I’m already feeling a bit like a boiling frog. Co-piloting my way through the day. Helping with a problem. Organising tasks. Making sense of dense datasets and content.

Anyway, this is all to say, pay attention.

Not just to the cool new model or app or assistant. But how it’s being used at scale.

We need to pay attention to:

What, and who, are these models trained on?

What personal data is leaking - in fact gushing - into these platforms? (Do you know where your data is, let alone what data is captured?)

What security, privacy and data governance guardrails are in place?

Fine, move fast and break things.

But just be careful when 80% of the top things you’re breaking are Personal.

Winning at AI agents with user experience

Microsoft have just published some excellent principles for designing AI Agent systems.

Why?

Well, that’s interesting. At least interesting because it’s Microsoft. It seems they want to “emphasize the value of human-centric principles amid rapid advancements in AI”.

Brilliant stuff.

You can almost feel the gentle hand on the shoulder from Mustafa Suleyman.

He’s the DeepMind co-founder who set up Inflection.ai and ‘Pi’, one of the breakthrough Personal AI platforms. All before he and his entire team were personally acqui-hired by Microsoft CEO Satya Nadella, to head up the corporation’s Personal AI plans.

There’s some real juice in here to squeeze from their ‘Agent UX Design Principles’:

Agent (Space): This is the environment in which the agent operates. These principles inform how we design agents for engaging in physical and digital worlds.

Connecting, not collapsing: help connect people to other people, events, and actionable knowledge to enable collaboration and connection.

Easily accessible yet occasionally invisible: an agent largely operates in the background and only nudges us when it is relevant and appropriate.

Agent (Time): This is how the agent operates over time. These principles inform how we design agents that interact across the past, present, and future.

Past: reflecting on history that includes both state and context.

Now: nudging more than notifying.

Future: adapting and evolving.

Agent (Core): These are the key elements in the core of an agent’s design. These principles inform how we design the foundation of individual agents and how they might interact with other agents.

Embrace uncertainty but establish trust.

Transparency, control, and consistency are foundational elements of all agents.

And then there’s the punchline:

“As with any product development process, it is critical to start with an end-user/customer problem and then brainstorm possible solutions that may or may not involve the use of AI. This helps to ensure we continue to center human needs as we create new user experiences with agentic systems.”

They put it more simply at the top of the article. “Remember: plenty of customer problems do not need AI”

Well quite.

And for the use cases that do need AI, the user experience is going to separate the winners from the losers.

I’ve also seen some excellent AI experience design work coming out of London design studio ‘Else’. Working on AI Agents and how brands can seize ‘Ownable Moments’. They’ve won awards for it.

MSFT’S AI PRINCIPLES, ELSE DESIGN

Our blindspots with MCP (it’s early and complicated)

Stuart Winter-Tear is bullish on the potential upside of AI Agents. But he’s also on a rip around the potential issues. Especially around Anthropic’s Model Context Protocol (MCP), which he reminds us is still very early in its development.

Here’s a snippet from a security research paper he recently dug up on the gaps and risks around MCP.

On security:

Name Collision – Malicious servers using similar names to trusted ones to deceive users.

Installer Spoofing – Fake or malicious installers introducing backdoors or malware.

Code Injection/Backdoors – Hidden malicious code in MCP server components or dependencies.

Post-Update Privilege Persistence – Retained privileges after updates leading to unauthorised access.
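To make the first of those risks concrete, here is a minimal sketch of a name collision check. It is not from the research paper and not part of MCP itself. The server names, the `lookalike_of` helper and the 0.85 threshold are all hypothetical, chosen purely for illustration. The idea is simply to flag a server whose name looks suspiciously close to one you already trust.

```python
from difflib import SequenceMatcher

# Hypothetical allowlist of server names the user already trusts.
TRUSTED_SERVERS = {"github-mcp", "slack-mcp", "filesystem-mcp"}


def normalise(name: str) -> str:
    """Lowercase and drop separators so 'GitHub_MCP' compares equal to 'github-mcp'."""
    return "".join(ch for ch in name.lower() if ch.isalnum())


def lookalike_of(candidate: str, trusted=TRUSTED_SERVERS, threshold: float = 0.85):
    """Return the trusted name that `candidate` appears to imitate, or None.

    An exact match is the trusted server itself, so it is not flagged.
    The threshold is an arbitrary illustrative value, not a recommendation.
    """
    if candidate in trusted:
        return None
    cand = normalise(candidate)
    for name in sorted(trusted):
        similarity = SequenceMatcher(None, cand, normalise(name)).ratio()
        if similarity >= threshold:
            return name
    return None


if __name__ == "__main__":
    for server in ["github-mcp", "githab-mcp", "GitHub_MCP", "weather-mcp"]:
        print(server, "->", lookalike_of(server))
```

A string similarity check like this would only catch the crudest impersonation attempts. It says nothing about who published a server or what its code does, which is where the governance gaps come in.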

On governance:

Lack of Centralised Security Oversight – No unified authority to enforce security policies or vet server components.

Inconsistent Authentication/Authorisation Models – Varying mechanisms across MCP clients and servers cause potential access control loopholes.

Absence of Formal Reputation Systems – Users have limited ways to assess the trustworthiness of MCP servers or installers.

And that’s before you get to data protection, privacy and ethics. Which, by the way, are about security and governance too.

These are blindspots for sure. So who’s working on it?

It reminds me of the old proverb, “There is so much to do, and so little time, we must go slowly.”

Going for dinner, and leaving with an invasive digital record you never agreed to

The head of data privacy at US bank Wells Fargo recently posted about his experience trying to find parking on a night out. And being forced by the car park into a data relationship he didn’t ask for, and didn’t consent to.

“I pulled up to the gate expecting the standard paper ticket. Instead, a screen lit up and Vend Park asked for my phone number. There was no skip button, no explanation. Just an entry field, a closed gate in front of me, a line of cars behind me, and hungry kids in the back seat. I was stuck.

“I entered my phone number. Instantly, and without warning, an unseen camera scanned my license plate, matched it to my phone number, and the gate lifted.

“That was it. No consent screen. No privacy policy. No way to review what I’d just agreed to. The transaction was over before I could think through what I’d given up.”

“My phone number and license plate are now linked in a commercial system I’ve never heard of. From that, a profile likely will be built. Not just where I parked last night, but now how often I park there, how long I stay, and what kind of cars I drive. If the garage uses a shared platform, as many now do, that profile will follow me across other garages, other cities, even other services.”

“Location data, visit patterns, inferred income, inferred medical diagnosis, habits, and more. All created without notice. All retained without consent.”

The post now has over 700 ‘likes’ on LinkedIn and over 150 comments. It has, as they say, gone viral.

Why?

Because it’s touched a nerve. Because we’re all fed up with this approach to data collection. As Daniel, the author of the post, puts it:

“The system captured my data as a condition of entry, as always offering me a modicum of convenience in exchange for something far more valuable: persistent traceability.”

A few weeks ago, I posted about a similar example. Being asked (forced) to accept my car’s T&Cs. On a tiny screen, just as I switched on the engine and needed to get somewhere. Terrible timing, terrible experience.

But at least I was given notice that it was happening.

The funny thing is that both companies - Vend Park and Kia - think they are making money WITH data. What they are missing is that they should be making money BECAUSE of data.

The companies that will win will be those that build trust with data. Where the customer will value that data sharing, and will share more because of it.

The companies we trust will have a longer-lasting, more valuable customer relationship. And with access to more valuable customer data, they can better personalise services, create more value, and make more money.

You see, Vend Park and Kia have it wrong. They see it as a trade-off. Data value OR digital trust. But it’s actually about data value AND digital trust. More trust means MORE value.

Why? Because I won’t be accepting those car terms any time soon (and most likely will give them fake info when I have to).

But Daniel has it worse. He went to dinner. And left with an invasive digital record he never agreed to create.

OTHER THINGS

There are far too many interesting and important Customer Futures things to include this week.

So here are some more links to chew on:

Article: Tim Berners-Lee on ‘Building AI That Benefits Everyone’

Post: What’s the difference between Agent-To-Agent vs. MCP vs. AgentForce?

Article: The False Intention Economy - How AI Systems Are Replacing Human Will with Modeled Behavior

Post: Agentic identity isn’t a security feature - it’s a structural reframe of how identity works

News: Meta’s 2024 ‘Consent or Pay’ breached the EU Digital Markets Act and triggers €200M fine

And that’s a wrap. Stay tuned for more Customer Futures soon, both here and over at LinkedIn.
